AI data centers are revolutionizing how we manage and process data. These advanced facilities use modern technologies that boost performance and scalability, making real-time analytics possible. With advances in generative AI, and now agentic AI, solidifying the role of AI as a central driver of innovation and operational efficiency, understanding the unique features and benefits of AI data centers is essential. This article explores what an AI data center is, its architecture, and the role it plays in shaping the future of data management.

AI data centers: A new era of data management

What is an AI data center?

An AI data center is a specialized facility equipped to support artificial intelligence workloads, which require a combination of high computational power, massive data throughput and storage scalability, and energy efficiency measures to offset energy consumption. Unlike regular data centers, AI facilities are optimized for high-performance computing tasks, allowing quick data processing and analysis. They feature advanced hardware setups, such as GPUs and TPUs, which enhance the performance of machine learning and deep learning models. With these technologies, AI data centers ensure seamless data transfer and real-time processing.

AI data center architecture incorporates software-defined networking, unified network security measures, and hyperconverged infrastructure. These and other advanced solutions create adaptable environments for AI workloads, protect user data, and deliver edge computing and inferencing capabilities. Dynamic resource allocation and support for scaling operations are also needed. Automated tools help streamline workflows, reduce deployment times for AI models, and improve data management efficiency.

AI facilities face unique data processing and storage requirements. Robust storage solutions are vital to handle the rapid data growth from AI applications. AI data center infrastructure must ensure high availability and reliability so that data is easy to access for training and inferencing tasks. Smart data management strategies optimize performance while reducing costs, allowing organizations to fully leverage AI and gain a competitive edge.

What are the different kinds of AI data centers?

Types of AI data center deployment models

AI data centers come in three primary deployment models:

- On-premises data centers provide organizations with complete control over their hardware and data center security, suitable for those with strict compliance needs.

- Cloud-based AI facilities offer scalability and flexibility, allowing businesses to adjust quickly to evolving AI workloads.

- Hybrid AI data center deployments combine the scalability and flexibility of cloud resources with the control, security, and low-latency performance of on-premises infrastructure. This enables organizations to optimize costs, meet compliance requirements, and support diverse workloads.
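
As a rough illustration of how these trade-offs might drive placement in a hybrid deployment, the following Python sketch routes a workload to on-premises or cloud infrastructure. The `Workload` fields, the `choose_placement` function, and the 10 ms latency threshold are hypothetical choices made for this example, not part of any product or standard.

```python
from dataclasses import dataclass

# Hypothetical threshold: workloads with a tighter latency budget than
# this are kept on-premises in this sketch.
LOW_LATENCY_MS = 10.0


@dataclass(frozen=True)
class Workload:
    name: str
    data_must_stay_on_site: bool  # e.g. a data residency or compliance rule
    max_latency_ms: float         # latency budget for the workload


def choose_placement(workload: Workload) -> str:
    """Return "on-premises" or "cloud" for a workload (illustrative policy)."""
    # Compliance comes first: regulated data never leaves the site.
    if workload.data_must_stay_on_site:
        return "on-premises"
    # Latency-sensitive workloads stay close to their users and data.
    if workload.max_latency_ms <= LOW_LATENCY_MS:
        return "on-premises"
    # Everything else can use elastic cloud capacity.
    return "cloud"


if __name__ == "__main__":
    for w in (
        Workload("claims-model", True, 200.0),
        Workload("robot-control", False, 5.0),
        Workload("batch-training", False, 500.0),
    ):
        print(w.name, "->", choose_placement(w))
```

A real policy would likely weigh cost, capacity, and data volume as well; this sketch only captures the compliance and latency criteria named above.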

Physical infrastructure for AI data centers

Hyperscale and colocation infrastructure strategies are key in the evolving landscape of AI data centers. Hyperscale centers support large-scale operations typical of major cloud service providers, focusing on efficiency and scalability to manage vast data volumes. Colocation centers offer shared space, power, and cooling for multiple clients, helping to provide flexibility and cost savings for businesses not needing hyperscale solutions.

Each of these infrastructure strategies supports distinct needs of AI applications. Hyperscale facilities suit companies needing extensive processing power for AI training models, while colocation facilities cater to smaller-scale AI-driven applications that benefit from shared infrastructure.

How are AI data centers unique?

As businesses turn to AI to enhance operations and decision making, understanding the differences between AI data centers and traditional centers is crucial.

What distinguishes AI data centers from traditional data centers?

AI data centers are built to meet the specific demands of AI workloads, featuring advanced infrastructure for high-performance computing, optimized storage solutions, and scalable networking capabilities. Traditional centers often rely on standard servers, which may struggle to handle the intensive data processing AI applications require.

AI facilities typically demonstrate superior efficiency metrics, using technologies like GPU acceleration to boost processing speeds for complex algorithms. They employ intelligent resource management systems that dynamically allocate resources based on workload needs, latency targets, and throughput requirements. In contrast, traditional centers may encounter performance bottlenecks due to their rigid architectures.
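
One simple way to picture dynamic allocation is a priority-ordered pass over pending jobs. The sketch below is a minimal Python illustration under assumed inputs; the function name `allocate_gpus`, the job names, and the priority scheme are hypothetical, and production schedulers are considerably more sophisticated.

```python
def allocate_gpus(total_gpus, requests):
    """Greedily grant GPUs to jobs, highest priority first.

    requests is a list of (job_name, priority, gpus_wanted) tuples.
    Returns a dict mapping each job name to the number of GPUs granted.
    """
    allocation = {}
    remaining = total_gpus
    # Serve higher-priority jobs first (larger number = more urgent).
    for name, _priority, wanted in sorted(
        requests, key=lambda r: r[1], reverse=True
    ):
        granted = min(wanted, remaining)  # never hand out more than is left
        allocation[name] = granted
        remaining -= granted
    return allocation


if __name__ == "__main__":
    # Hypothetical pool of 8 GPUs shared by a latency-sensitive inference
    # service (priority 5) and a training job (priority 1).
    print(allocate_gpus(8, [("training", 1, 6), ("inference", 5, 4)]))
```

In this example the inference service receives its full request and the training job receives whatever remains, which is one way a facility might favor latency-sensitive work when capacity is tight.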

Cost and operational considerations further distinguish these centers. While traditional centers may have lower initial setup costs, they often incur higher operational expenses over time due to inefficiencies. AI data centers, though they require a higher upfront investment, can provide long-term savings. To realize those savings, AI facilities need to optimize resource usage and streamline maintenance so that they run more energy efficiently.
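
The trade-off between upfront and operating cost can be made concrete with a break-even estimate. The Python sketch below assumes constant annual operating costs and ignores financing, depreciation, and hardware refresh cycles; the function name and all figures are hypothetical and serve only to show the arithmetic.

```python
def break_even_years(capex_low, opex_high, capex_high, opex_low):
    """Years until the higher-capex, lower-opex option becomes cheaper.

    capex_low / opex_high describe the option that is cheap to build but
    costly to run; capex_high / opex_low describe the opposite option.
    """
    if capex_high <= capex_low or opex_low >= opex_high:
        raise ValueError("expected higher capex paired with lower annual opex")
    extra_investment = capex_high - capex_low
    annual_saving = opex_high - opex_low
    return extra_investment / annual_saving


if __name__ == "__main__":
    # Hypothetical figures: 1.0M vs 2.5M to build, 400k vs 100k per year to run.
    years = break_even_years(1_000_000, 400_000, 2_500_000, 100_000)
    print(f"Break-even after {years:.1f} years")
```

Under these assumed figures, the extra 1.5M investment is recovered at 300k per year, so the more expensive build pays for itself after five years.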

What are the security challenges specific to AI data centers?

When securing an AI data center, it’s important to be mindful of several unique security challenges. These include:

- Data privacy and protection—Vast amounts of sensitive data used to train AI models can increase the risk of breaches or unauthorized access.

- Increased attack surface—The integration of GPUs, AI accelerators, and distributed systems demands more robust security measures.

- Model theft and tampering—AI models are vulnerable to intellectual property theft, reverse engineering, or manipulation during training and deployment.

- Scalability of security—The compute-intensive and dynamic nature of AI workloads requires a security solution that can scale without compromising performance.

- Supply chain vulnerabilities—Hardware and software dependencies in AI infrastructure may expose components to potential security risks.

- Compliance challenges—The scale and sensitivity of AI workloads increase the complexity of ensuring compliance with evolving data protection regulations.

An on-premises or hybrid AI data center provides advantages for addressing these security and data privacy challenges, making it easier to:

- Maintain full data control—With on-premises deployments, organizations maintain control and ownership over their AI data and models, ensuring they remain within a secure internal environment.

- Minimize the attack surface—Hosting AI workloads and data internally reduces exposure to interception or unauthorized access during data transmission over public networks.

- Tailor security policies—On-premises setups allow for customized security measures such as network segmentation, strict access controls, and advanced monitoring.

- Comply with regulations—Many industries mandate data residency and privacy standards that are easier to enforce with on-premises deployments.

- Leverage existing security infrastructure—Organizations can integrate AI workloads with their firewalls, identity management solutions, and other current security tools to maintain a unified security posture.

Forecasts for AI data centers

The rise of AI-driven solutions is reshaping the data center landscape, with trends showing a surge in demand. Businesses across sectors use AI technologies to enhance operations and innovate. Studies project exponential growth in the global AI data center market, driven by the need for faster processing and real-time analytics.

As AI's role expands, it influences the design and architecture of future data centers. Companies are rethinking infrastructure to accommodate AI workloads needing high computational power and low-latency connectivity. Data center modernization ensures facilities can handle current AI demands and are future-proofed for advancements.

Are AI data centers sustainable?

The GPUs that power AI data centers consume 10 to 15 times more power per processing cycle than the CPUs that power traditional data centers. This makes efficiency critical for AI data center design, with a focus on energy consumption and environmental impact. Organizations seek solutions that promote efficiency and align AI initiatives with sustainability goals for resilient data center management. AI data centers can employ advanced energy strategies, such as optimizing power use, using clean energy sources, and improving cooling efficiency. These efforts can help meet increasing demand for AI data centers while complying with regulations and securing adequate energy.
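
To show how cooling and power improvements might translate into energy savings, the Python sketch below uses power usage effectiveness (PUE), a common industry metric defined as total facility energy divided by IT equipment energy. PUE is not mentioned in the text above, and the 1,000 kW IT load and the PUE values of 1.5 and 1.2 are hypothetical inputs chosen only for illustration.

```python
HOURS_PER_YEAR = 8760  # 24 hours * 365 days


def annual_facility_kwh(it_load_kw: float, pue: float) -> float:
    """Estimate yearly facility energy from a constant IT load and a PUE."""
    if pue < 1.0:
        # A facility cannot use less energy than its IT equipment alone.
        raise ValueError("PUE cannot be below 1.0")
    return it_load_kw * pue * HOURS_PER_YEAR


if __name__ == "__main__":
    it_load_kw = 1000.0  # hypothetical constant IT load
    baseline = annual_facility_kwh(it_load_kw, 1.5)  # assumed current PUE
    improved = annual_facility_kwh(it_load_kw, 1.2)  # assumed PUE after upgrades
    print(f"Estimated saving: {baseline - improved:,.0f} kWh per year")
```

The estimate assumes the IT load is constant all year, which real facilities rarely achieve, so it is best read as an order-of-magnitude guide rather than a forecast.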

The future of AI data centers

The evolution of AI data centers is driven by new technologies that promise to redefine data processing and management. Agentic AI, for example, is ushering in a wave of intelligent, autonomous agents capable of automating entire workflows and solving complex problems.

As reliance on AI grows, next-generation centers must accommodate massive data volumes while delivering speed and flexibility. Innovations like advanced cooling technologies, energy-efficient hardware, and edge computing pave the way for cost-effective and scalable AI-centric infrastructures.

How is AI driving the growth of data centers?

Advancements in AI technology will further expand data center capabilities. Sophisticated machine learning algorithms will improve predictive analytics, helping to optimize resource allocation and reduce downtime. Faster data transfer rates from emerging technologies will enhance real-time processing capabilities, which are essential for AI applications.

The implications for businesses are significant. AI data centers empower organizations to harness data effectively, driving insights that enhance user and customer experiences as well as operational efficiencies. Adopting advanced AI solutions allows companies to automate routine tasks, freeing resources for strategic initiatives. The future of AI data centers is promising, offering transformative opportunities.

Preparing the data center for AI

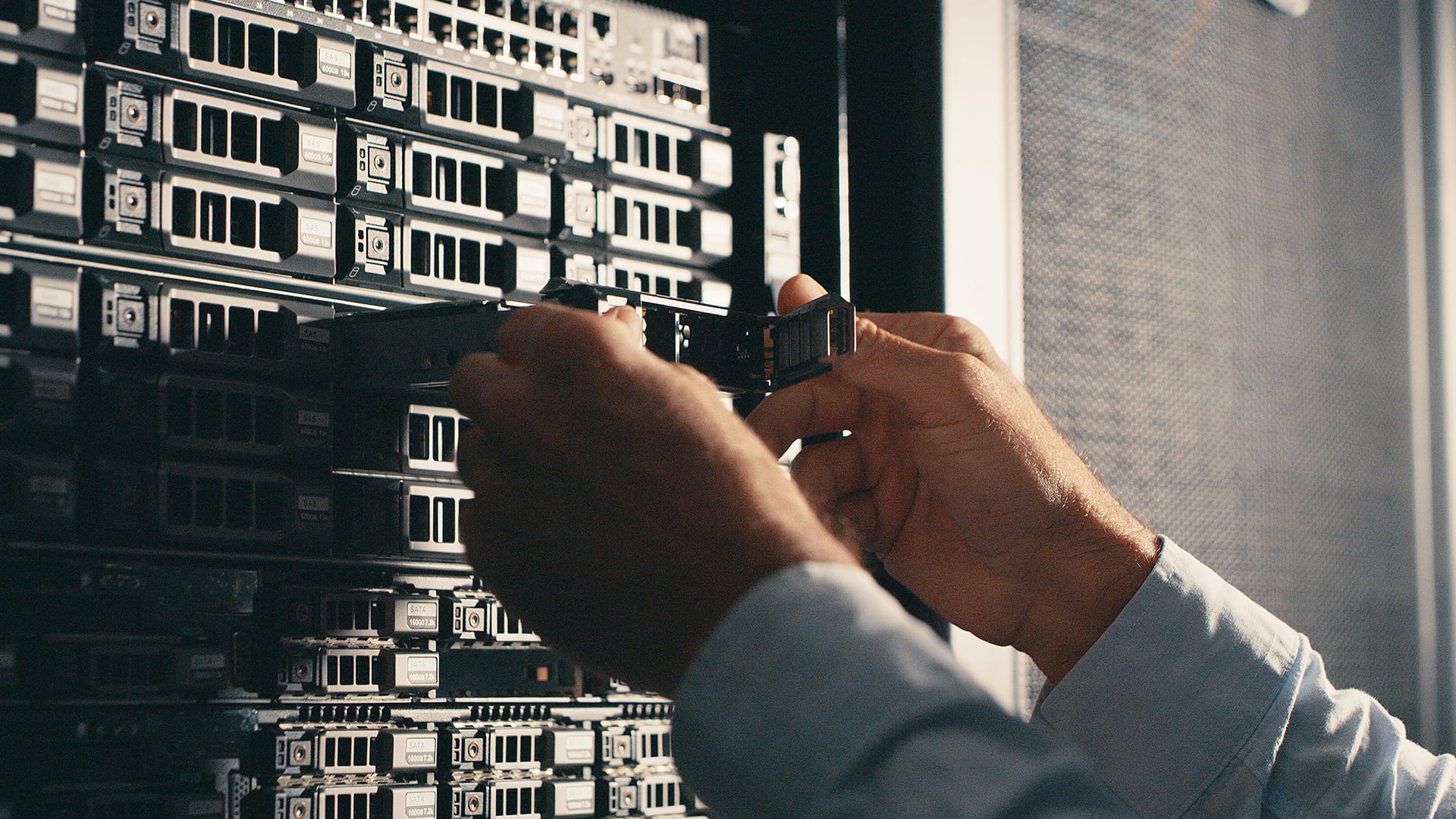

As businesses increasingly turn to AI to drive innovation, it is more critical than ever to ensure that the data center infrastructure is ready for AI. Deploying AI in a data center presents unique challenges, including the need for high-speed connectivity and scalability to handle massive datasets.

Creating an AI-ready data center requires an investment in cutting-edge infrastructure with integrated systems and cloud capabilities. Solutions for AI data centers should be designed with AI in mind, offering seamless integration of hardware and software to ensure optimal performance. They should also prioritize robust security measures to protect sensitive AI workloads and data throughout the lifecycle. AI-ready data center infrastructure should not only support the latest AI technologies but also provide flexibility to evolve as organizational needs change.